We present Co-SLAM, a real-time RGB-D SLAM system based on a neural implicit representation that performs robust camera tracking and high-fidelity surface reconstruction.

Co-SLAM represents the scene as a multi-resolution hash grid to exploit its fast convergence and its ability to represent high-frequency local features. In addition, to incorporate surface coherence priors, Co-SLAM adds one-blob encoding, which we show enables powerful scene completion in unobserved areas. Our joint encoding brings the best of both worlds to Co-SLAM (speed, high-fidelity reconstruction, and surface coherence priors), enabling real-time and robust online performance. Moreover, our ray sampling strategy allows Co-SLAM to perform global bundle adjustment over all keyframes, instead of relying on keyframe selection to maintain a small number of active keyframes as competing neural SLAM approaches do.
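
To give a feel for the coordinate half of the joint encoding, the following is a minimal NumPy sketch of a one-blob style encoding. It is a simplification written for illustration, not the Co-SLAM implementation: the function name `one_blob`, the choice of 16 bins, and the kernel width of one bin are assumptions, and the Gaussian is evaluated at the bin centres rather than integrated over each bin.

```python
import numpy as np

def one_blob(x, n_bins=16):
    """Encode scalars in [0, 1] as a Gaussian bump sampled at n_bins bin centres.

    Illustrative simplification: n_bins and the kernel width are assumed values.
    """
    x = np.asarray(x, dtype=np.float64)
    centres = (np.arange(n_bins) + 0.5) / n_bins  # bin centres inside [0, 1]
    sigma = 1.0 / n_bins                          # kernel width of one bin (assumption)
    diff = x[..., None] - centres                 # shape (..., n_bins)
    return np.exp(-0.5 * (diff / sigma) ** 2)

# Encode a 3D point, assumed to be already normalised to the unit cube.
point = np.array([0.10, 0.50, 0.93])
encoded = one_blob(point)        # one row of 16 activations per coordinate
features = encoded.reshape(-1)   # flattened 48-dimensional input feature
```

Because neighbouring inputs activate overlapping bins, the encoding varies smoothly with position, which is one intuition for why a coordinate encoding of this kind favours coherent surfaces, while the hash grid supplies the fine local detail.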

Experimental results show that Co-SLAM runs at 10 Hz and achieves state-of-the-art scene reconstruction results and competitive tracking performance on various datasets and benchmarks (ScanNet, TUM, Replica, Synthetic RGBD).

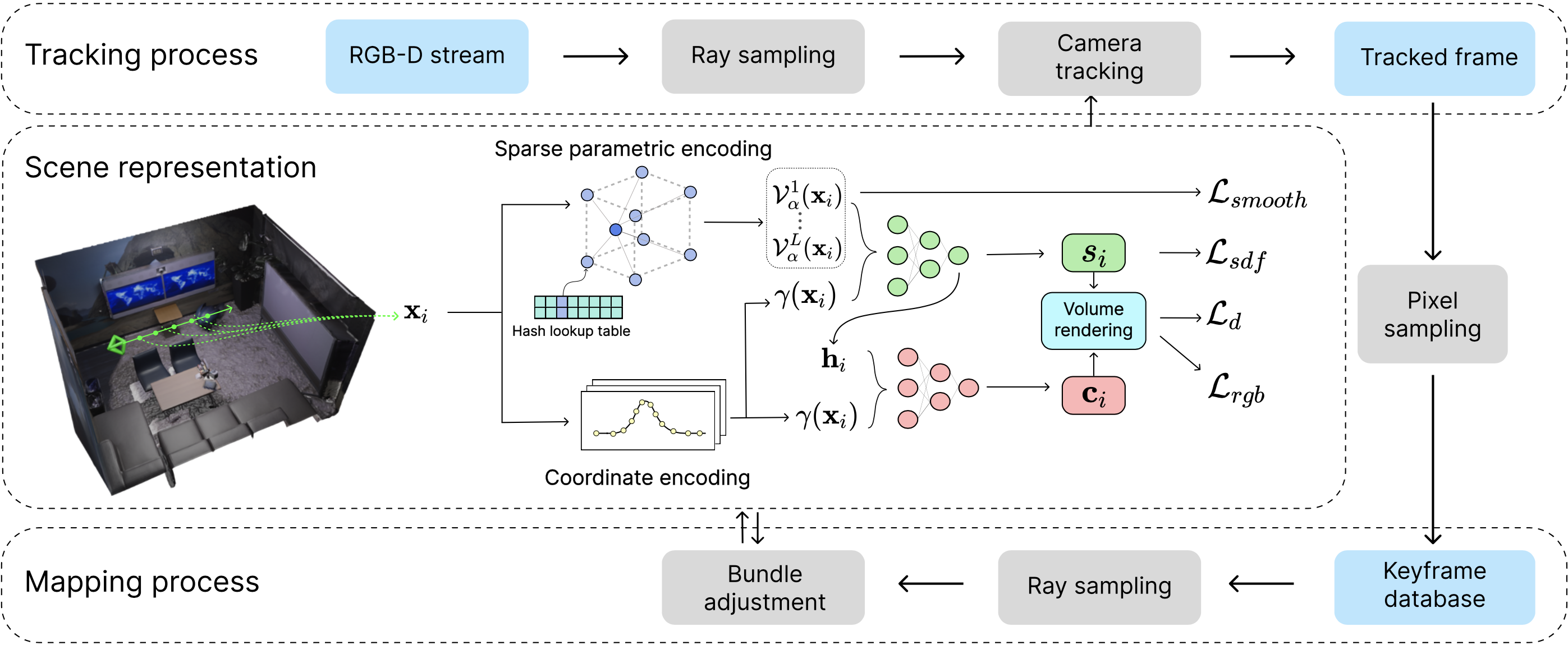

Co-SLAM consists of three major parts: 1) Scene representation maps the input position to RGB and SDF values using joint coordinate and sparse parametric encoding with two shallow MLPs. 2) Tracking performs camera tracking by minimising our objective functions with respect to the trainable camera parameters. 3) Mapping uses the selected pixels with tracked poses to perform bundle adjustment, which jointly optimises our scene representation and the tracked camera poses by minimising our objective functions with respect to both the scene representation and the trainable camera parameters.
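
The data flow of the scene representation in part 1 can be sketched as follows. This is a toy forward pass under stated assumptions, not the released code: a small dense voxel grid with nearest-voxel lookup stands in for the multi-resolution hash grid, all weights are random, and the layer sizes and the wiring (a geometry MLP that outputs the SDF plus a feature vector, which a colour MLP then consumes) are hypothetical choices made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def coord_encoding(x, n_bins=16):
    # Smooth per-coordinate encoding: Gaussian bumps at bin centres, x in [0, 1].
    centres = (np.arange(n_bins) + 0.5) / n_bins
    blobs = np.exp(-0.5 * ((x[..., None] - centres) * n_bins) ** 2)
    return blobs.reshape(len(x), -1)              # (N, 3 * n_bins)

def grid_lookup(x, grid):
    # Stand-in for the hash grid: nearest-voxel lookup in a small dense grid.
    res = grid.shape[0]
    idx = np.clip((x * res).astype(int), 0, res - 1)
    return grid[idx[:, 0], idx[:, 1], idx[:, 2]]  # (N, GRID_DIM)

def mlp(x, w1, w2):
    # Shallow MLP: a single hidden ReLU layer.
    return np.maximum(x @ w1, 0.0) @ w2

# Hypothetical sizes, chosen only to keep the example small.
RES, GRID_DIM, HIDDEN, FEAT, ENC = 8, 4, 32, 15, 3 * 16
grid = rng.normal(0.0, 0.1, (RES, RES, RES, GRID_DIM))
geo_w1 = rng.normal(0.0, 0.1, (ENC + GRID_DIM, HIDDEN))
geo_w2 = rng.normal(0.0, 0.1, (HIDDEN, 1 + FEAT))
col_w1 = rng.normal(0.0, 0.1, (ENC + FEAT, HIDDEN))
col_w2 = rng.normal(0.0, 0.1, (HIDDEN, 3))

def query(x):
    """Map points in the unit cube to (rgb, sdf)."""
    enc = coord_encoding(x)
    joint = np.concatenate([enc, grid_lookup(x, grid)], axis=1)
    geo_out = mlp(joint, geo_w1, geo_w2)          # geometry MLP
    sdf, feat = geo_out[:, 0], geo_out[:, 1:]
    col_in = np.concatenate([enc, feat], axis=1)
    rgb = 1.0 / (1.0 + np.exp(-mlp(col_in, col_w1, col_w2)))  # sigmoid keeps rgb in (0, 1)
    return rgb, sdf

points = rng.uniform(0.0, 1.0, (5, 3))
rgb, sdf = query(points)
```

In a full system, tracking would optimise only the camera parameters against values rendered from `query`, while mapping would update the grid, the MLP weights, and the poses together; neither optimisation loop is shown here.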

@article{wang2023coslam,

title={Co-SLAM: Joint Coordinate and Sparse Parametric Encodings for Neural Real-Time SLAM},

author={Wang, Hengyi and Wang, Jingwen and Agapito, Lourdes},

journal={Proceedings of the IEEE international conference on Computer Vision and Pattern Recognition (CVPR)},

year={2023}

}