|

I am a PhD student at the CDT in Foundational AI at University College London, supervised by Prof. Lourdes Agapito. My research topic is neural surface reconstruction from video. I obtained my Master's degree at UCL with a thesis on "Neural Representations for 3D Reconstruction", also supervised by Prof. Lourdes Agapito. Prior to UCL, I obtained a bachelor's degree from the joint degree programme between QMUL and BUPT, where I worked with Dr. Changjae Oh. Feel free to drop me a message if you would like to know more about my research or are just up for a casual chat :) Email / CV / Google Scholar / LinkedIn / GitHub / Notebooks. I am looking for a research internship or visiting position in 2026. Feel free to reach out :) Please note that I might have issues obtaining a U.S. visa. |

|

|

I am currently working on 3D reconstruction with a special interest in spatial representations. There is an ongoing debate about whether explicit 3D representations are truly needed for tasks such as 3D reconstruction, world modeling, and scene understanding. My view has evolved over time, and at present, I believe they are essential. Humans can reason about the 3D world intuitively, but we cannot precisely measure or reproduce it without tools that enforce physical constraints. My current research aims to understand when and why spatial representations are necessary. |

|

Hengyi Wang, Lourdes Agapito arXiv, 2025 project page / code AMB3R is a multi-view feed-forward model for metric-scale dense 3D reconstruction that addresses diverse 3D vision tasks. Notably, AMB3R can be used for Visual Odometry and Structure from Motion with no task-specific fine-tuning or test-time optimization. |

|

Hengyi Wang, Lourdes Agapito 3DV, 2025 (Oral, Best Paper Candidate) project page / arXiv / code / video Spann3R is a transformer-based model that achieves incremental reconstruction from uncalibrated image collections with a simple forward pass. |

|

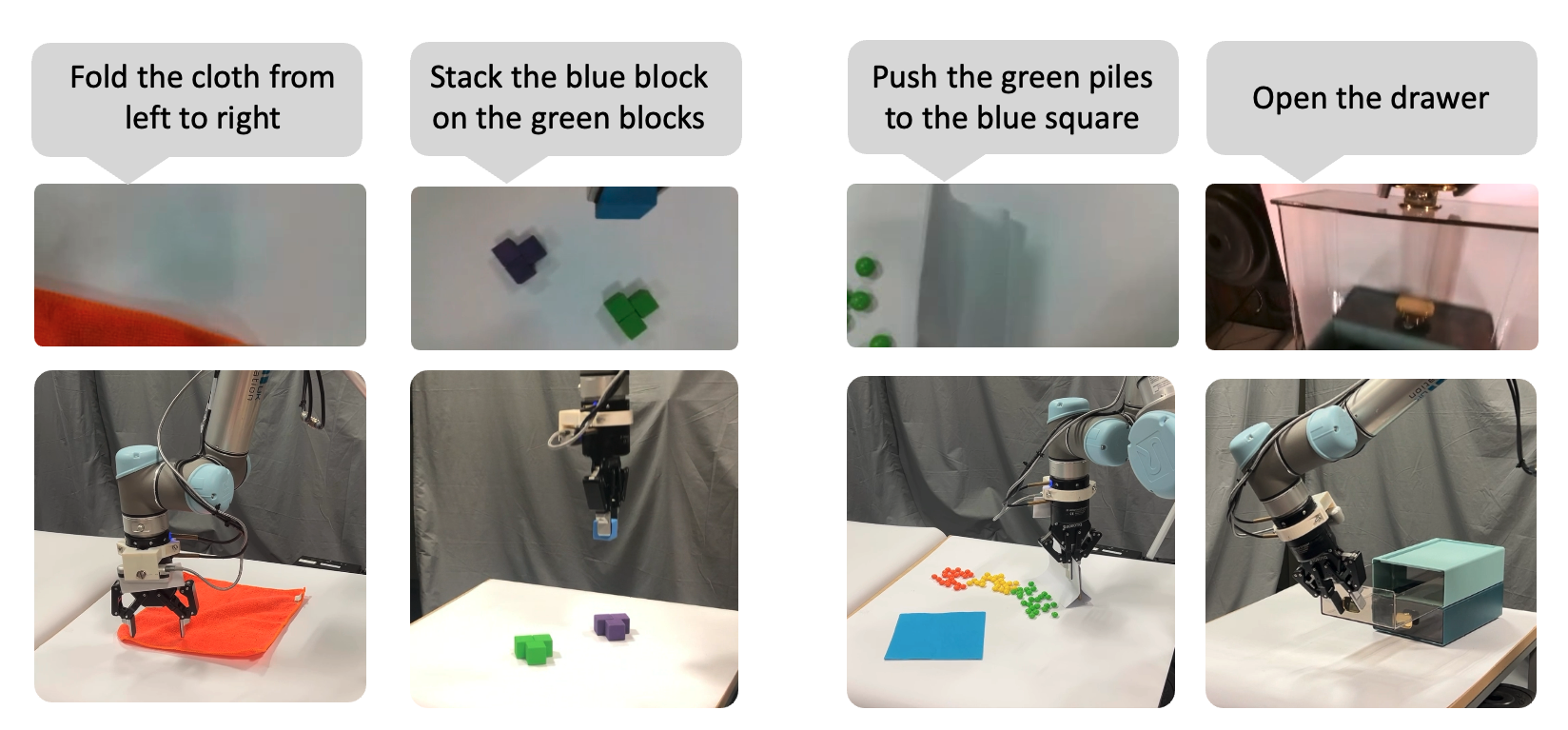

Chaoran Zhu, Hengyi Wang, Yik Lung Pang, Changjae Oh CoRL, 2025 project page / arXiv LaVA-Man is a language-guided robot manipulation system that leverages a CroCo-style self-supervised pretext task (goal image prediction) to learn the causality of visual state transitions in language-guided manipulation. |

|

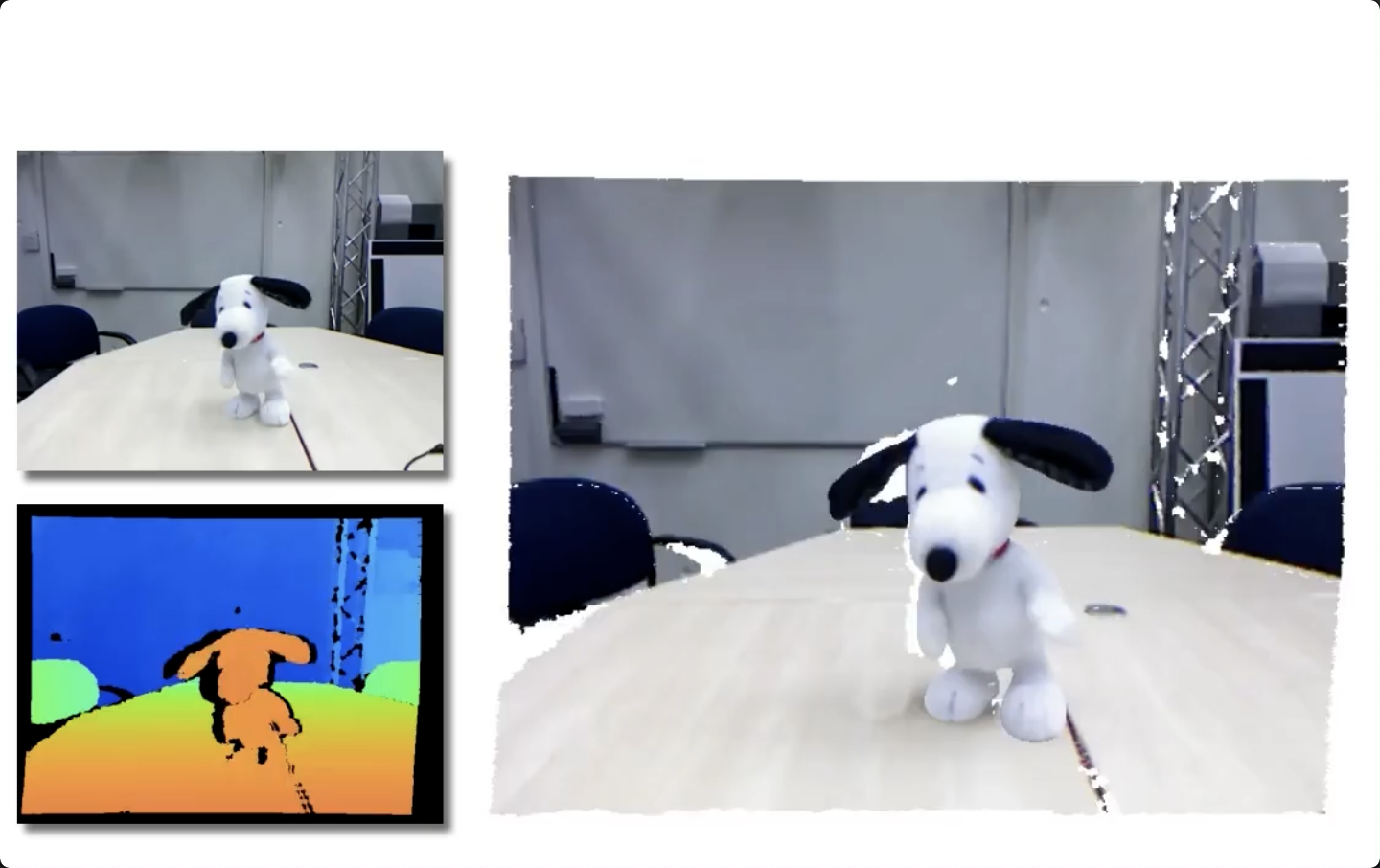

Hengyi Wang, Jingwen Wang, Lourdes Agapito CVPR, 2024 project page / arXiv / code / video MorpheuS is a dynamic scene reconstruction method that leverages neural implicit representations and diffusion priors to achieve 360° reconstruction of a moving object from a monocular RGB-D video. |

|

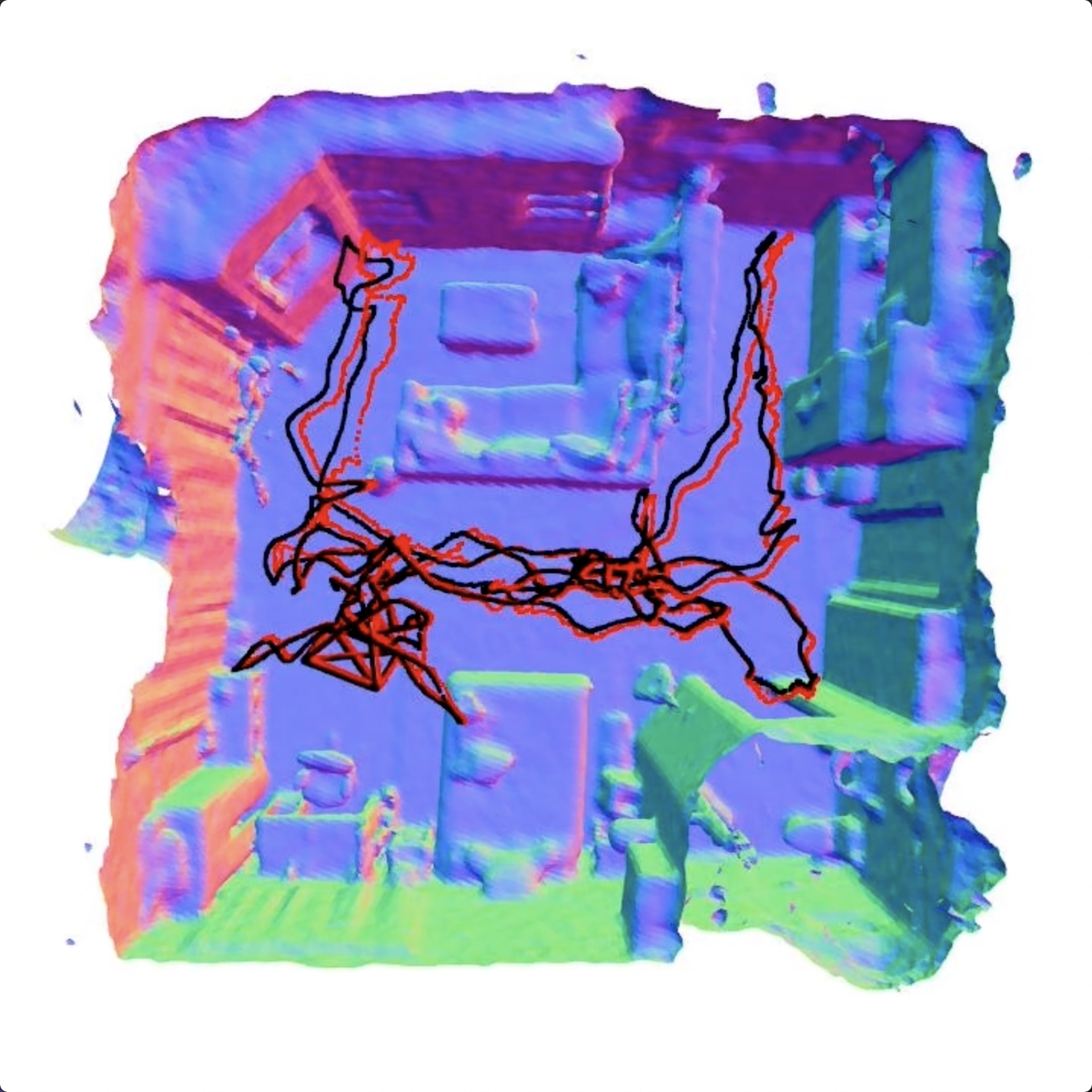

Hengyi Wang, Jingwen Wang, Lourdes Agapito CVPR, 2023 project page / arXiv / code / video Co-SLAM is a neural RGB-D SLAM system that performs robust camera tracking and high-fidelity surface reconstruction in real time. This paper extends the work presented in my MSc thesis. |

|

Master's thesis at UCL, 2022 paper / code Built a neural RGB-D surface reconstruction system based on joint coordinate and sparse parametric encoding. |

|

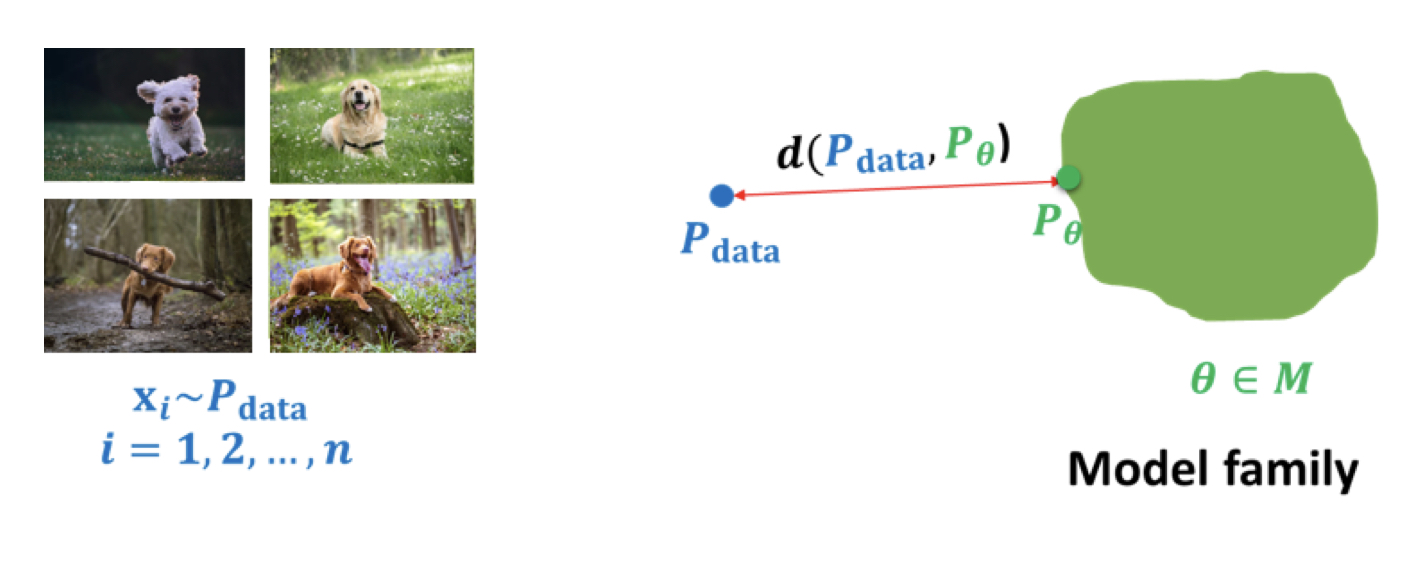

Stefano Ermon, Yang Song, notes |

|

Thanks to Jon Barron for the website. |