Abstract

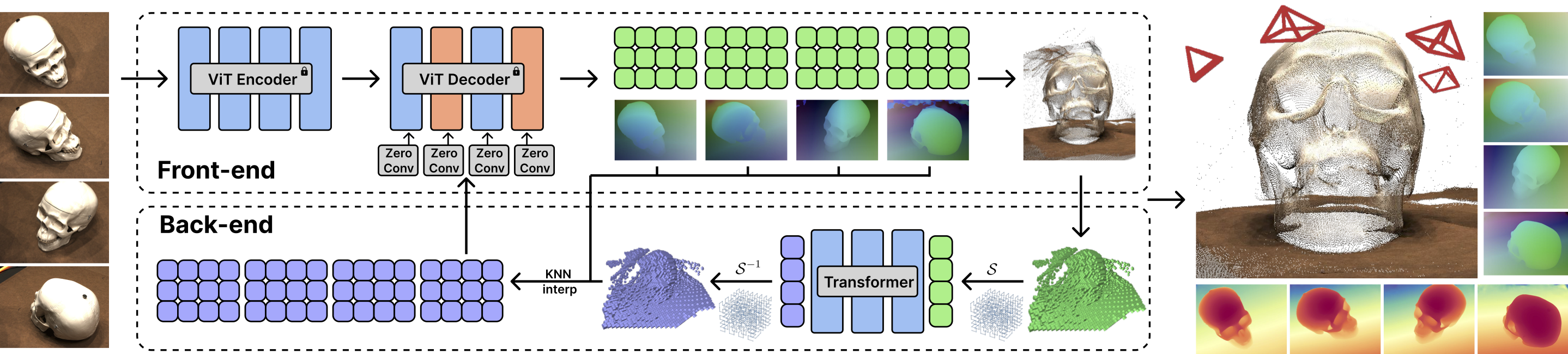

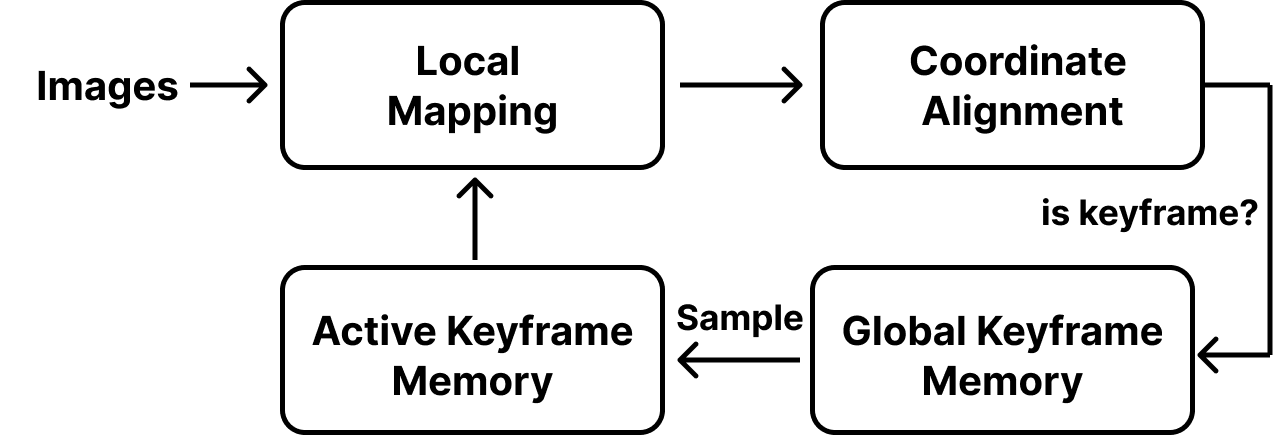

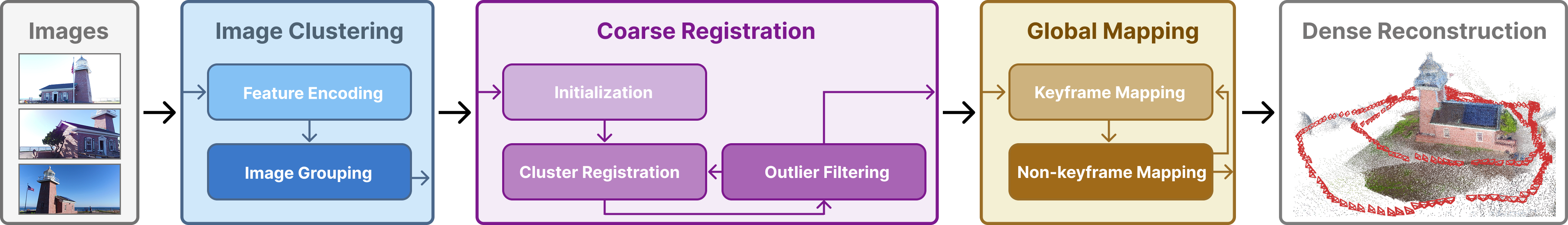

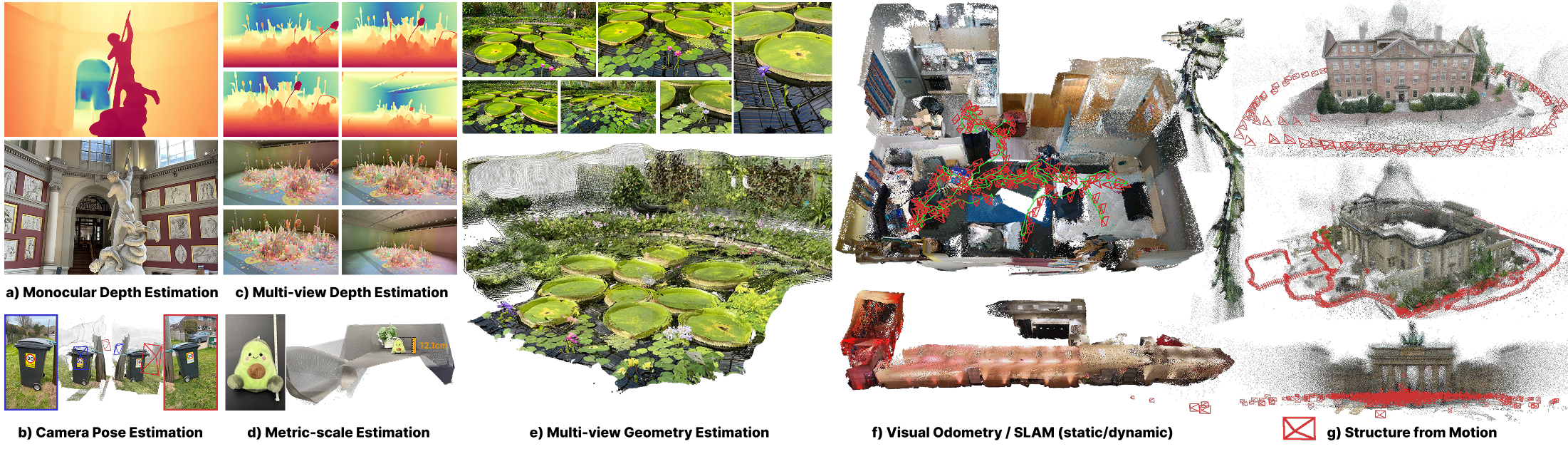

We present AMB3R, a multi-view feed-forward model for metric-scale dense 3D reconstruction that addresses diverse 3D vision tasks. The key idea is to use a sparse yet compact volumetric scene representation as the backend, which enables geometric reasoning with spatial compactness. Although trained solely for multi-view reconstruction, AMB3R can be seamlessly extended to online uncalibrated visual odometry or large-scale structure from motion without task-specific fine-tuning or test-time optimization. Compared to prior pointmap-based models, our approach achieves state-of-the-art performance in camera pose estimation, depth estimation, metric-scale estimation, and 3D reconstruction, and it even surpasses optimization-based SLAM and SfM methods that use dense reconstruction priors on common benchmarks.

TL;DR: 1) Spatial representations matter for feed-forward reconstruction; 2) A multi-view transformer can by itself serve as a feed-forward VO/SfM system, without task-specific fine-tuning or test-time optimization.
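
To make the idea of a sparse volumetric representation concrete, the sketch below pools per-point features into only the voxels that contain at least one 3D point. It is an illustration under stated assumptions, not AMB3R's actual backend: the function name `sparse_voxelize`, the mean-pooling rule, and the `voxel_size` parameter are all hypothetical choices made for this example.

```python
import numpy as np

def sparse_voxelize(points, features, voxel_size):
    """Pool per-point features into occupied voxels only (illustrative sketch).

    points:   (N, 3) array of 3D points in metric units.
    features: (N, C) array of per-point features.
    Returns the (M, 3) integer coordinates of occupied voxels and the
    (M, C) mean feature of the points falling into each of them.
    """
    # Integer voxel coordinate of every point.
    coords = np.floor(points / voxel_size).astype(np.int64)
    # Keep only occupied voxels; `inverse` maps each point to its voxel row.
    voxels, inverse = np.unique(coords, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)  # shape of `inverse` differs across NumPy versions
    # Sum the features of all points that share a voxel, then average.
    sums = np.zeros((len(voxels), features.shape[1]), dtype=np.float64)
    np.add.at(sums, inverse, features)
    counts = np.bincount(inverse, minlength=len(voxels))[:, None]
    return voxels, sums / counts

# Hypothetical toy input: two points share a voxel, one lies elsewhere.
points = np.array([[0.1, 0.2, 0.3], [0.4, 0.1, 0.2], [1.2, 1.2, 1.2]])
features = np.array([[1.0, 0.0], [3.0, 0.0], [5.0, 5.0]])
voxels, pooled = sparse_voxelize(points, features, voxel_size=0.5)
```

Memory in this layout grows with the number of occupied voxels rather than with the volume of the scene's bounding box, which is the sense in which such a representation is both sparse and compact.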